Introduction

Artificial Intelligence (AI) systems are increasingly deployed in safety-critical environments such as autonomous vehicles, healthcare, industrial automation, cybersecurity and, importantly, the control of power grids. A prominent challenge in these domains is the non-deterministic nature of many AI models, which introduces unique risks and considerations for safety, reliability, and trustworthiness.

Data and discussion from the International Energy Agency on the growth of AI patents associated with smart grids and energy system control are followed by a research report prepared by corpora.ai, ‘Non-deterministic AI safety-critical systems’, which is also available as a downloadable PDF with full citation details.

The availability of a range of digital technologies, as well as continuous improvements to their costs and performance, triggered initial interest in the concept of smart grids in the early 2000s and underpinned its subsequent move into the mainstream of electricity grid planning. The range of technologies includes:

— data management platforms

— data transmission and reception devices, systems and protocols, including wireless communication

— cloud data storage, plus tools for writing and recalling information

— cybersecurity, including encryption, blockchain and firewalls

— AI, based on machine learning, neural networks and other advances

These technologies are applicable across a number of grid challenges and technology areas. The patents highlighted here are those that relate to the general use of these digital enabling technologies in electricity grids specifically.

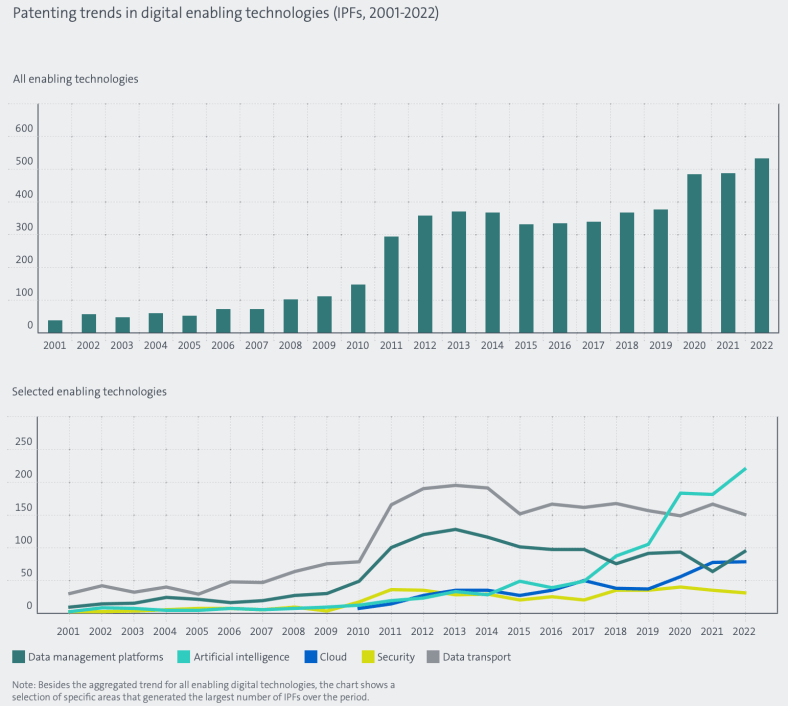

Patenting activity in digital enabling technologies for electricity grid applications has grown significantly since 2005, rising steadily at an average annual growth rate of 15% to a new peak of over 500 international patent families (IPFs) in 2022.

Acknowledgement: IEA and EPO, 2024 “Patents for enhanced electricity grids – A global trend analysis of innovation in physical and smart grids”, Licence: CC BY 4.0

Data transport-related IPFs, which were the largest single category of enabling digital technology IPFs over the period 2011-2022, targeted a range of different smart grid technology areas, with micro-grids and storage controls the largest among these. Japan, the EU27 and the US contributed in roughly equal proportions to patenting activities in data transport, each with 20-25% of all IPFs over the period 2011-2022. Innovation in data management platforms has a similarly cross-cutting nature. It is particularly common in micro-grids (targeted by 22% of IPFs related to data management platforms) and in forecasting and decision technologies (21%). Patenting activity in data management platforms has been mainly led by Japan, which contributed 38% of IPFs in that field over the period 2011-2022.

AI patenting, on the other hand, has to date been more concentrated. The largest share of AI-related IPFs supports forecast and decision applications, a category that accounts for 39% of AI-related IPFs and drove rapid growth in AI-related electricity grid patenting from 2000 to 2022. AI is nonetheless applied in patents related to other areas of smart grids as well, in particular micro-grids and outage management. The US and China are the main patenting regions for these, with 24% and 23% of AI-related IPFs respectively, followed by the EU27 countries with 18%.

IPFs relating to cybersecurity respond to the challenge of protecting data for an increasingly interconnected electricity grid that communicates with several orders of magnitude more devices than it did just a decade ago, including appliances in people’s homes. To date, cybersecurity enabling digital technologies are most associated with micro-grids and forecast and decision applications. It is noticeable that there is currently relatively little patenting in the area of cybersecurity in relation to the integration of stationary storage, remote operation of power plants and demand response. These may be areas that governments would wish to explore to see if innovation is well-matched with the level of expected risk.

Non-deterministic AI models, characterized by inherent variability in outputs even under identical inputs, present significant challenges for safety-critical systems that demand consistency, predictability, and interpretability. These models—ranging from large language models (LLMs) to generative AI and neuromorphic systems—introduce unpredictability that complicates validation, verification, and safety assurance processes vital in domains such as healthcare, autonomous vehicles, industrial control, and cybersecurity.

Core Challenges of Non-deterministic AI in Safety-critical Systems

Variability and Unpredictability

- Output Variability: Many AI systems can exhibit stochastic sampling, leading to different results from identical inputs, which undermines reliability and complicates safety validation. Generative models produce unpredictable quality and performance dependent on data, parameters, and context, posing significant safety risks.

- Impact on System Performance: Variability can cause unpredictable workload patterns and system responses, affecting overall reliability and operational safety. For instance, AI agents drawing from diverse data sources can adapt dynamically but risk unintended behaviours.
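The output variability described above can be illustrated with a minimal sketch of temperature-based sampling. It is not taken from the report: the action names, scores, and helper functions are hypothetical and chosen only to show why identical inputs can yield different outputs, and how greedy selection or a fixed seed removes that variation.

```python
import math
import random

def softmax(logits, temperature):
    """Convert raw scores to probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_action(logits, actions, temperature, rng):
    """Draw one action at random according to the softmax probabilities."""
    probs = softmax(logits, temperature)
    return rng.choices(actions, weights=probs, k=1)[0]

def greedy_action(logits, actions):
    """Deterministic alternative: always pick the highest-scoring action."""
    best_index = max(range(len(logits)), key=lambda i: logits[i])
    return actions[best_index]

# Hypothetical scores for three grid-control actions (illustrative values only).
ACTIONS = ["hold", "shed_load", "dispatch_storage"]
LOGITS = [2.0, 1.5, 1.0]

rng = random.Random()  # unseeded, so the sampled list can differ between runs
sampled = [sample_action(LOGITS, ACTIONS, 1.0, rng) for _ in range(10)]
greedy = [greedy_action(LOGITS, ACTIONS) for _ in range(10)]
print("sampled:", sampled)
print("greedy: ", greedy)
```

Passing a seeded generator such as `random.Random(0)` makes the sampled sequence repeatable, which helps when reproducing a test, although it does not make the underlying model any less stochastic in deployment.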

Verification and Validation Difficulties

- Limited Traditional Metrics: Standard evaluation frameworks such as randomized controlled trials (RCTs) or cohort studies are insufficient for non-deterministic models; novel assessment tools focusing on probabilistic safety margins, robustness, and transparency are necessary.

- Repeated Testing: Repeated testing under identical inputs is critical for assessing output sufficiency and reliability, although it does not eliminate the fundamental unpredictability.
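One way to picture repeated testing is a small harness that sends the same input many times and reports how often the outputs agree. The sketch below is an assumption-laden illustration, not a method from the report: `make_toy_model` is a hypothetical stand-in for a real model, and the agreement rate is just one possible summary statistic.

```python
import random
from collections import Counter

def repeat_test(model, prompt, runs):
    """Call the model `runs` times with the same input and tally the outputs."""
    counts = Counter(model(prompt) for _ in range(runs))
    top_output, top_count = counts.most_common(1)[0]
    return {
        "distinct_outputs": len(counts),
        "modal_output": top_output,
        "agreement_rate": top_count / runs,  # share of runs matching the most common output
    }

def make_toy_model(seed):
    """Hypothetical stand-in for a non-deterministic classifier (ignores its prompt)."""
    rng = random.Random(seed)
    def model(prompt):
        return rng.choices(["normal", "fault"], weights=[0.9, 0.1], k=1)[0]
    return model

report = repeat_test(make_toy_model(seed=42), "classify feeder 12 telemetry", runs=200)
print(report)
```

A high agreement rate only indicates consistency on the inputs that were tested; as the text notes, it does not remove the underlying unpredictability.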

Safety and Security Vulnerabilities

- Hallucinations and False Outputs: Non-determinism increases the risk of hallucinations, which can introduce safety hazards and security vulnerabilities that are challenging to mitigate.

- Data Security and Privacy: The proliferation of AI agents across enterprise and safety-critical systems elevates risks related to data breaches, unauthorized access, and non-deterministic access patterns that challenge traditional security paradigms.

Regulatory and Ethical Concerns

- Lack of Formal Standards: Formalised risk assessment frameworks specific to non-deterministic AI are scarce, creating gaps in safety assurance and regulatory compliance. Efforts such as AI standards development by Chinese authorities and international bodies aim to address these gaps.

- Ethical Risks: The unpredictable nature of AI outputs raises concerns about bias, transparency, and accountability, especially in medical, legal, and autonomous systems.

Approaches for Managing Non-determinism

Architectural and Technical Strategies

- Deterministic Mediation Layers: Incorporating deterministic software layers that mediate AI data access and control can help manage unpredictability, especially in enterprise environments.

- Routing and Replication: Query routing to replica sets or dedicated search nodes enhances response stability and mitigates variability impacts.

- Error Correction and Data Validation: Embedding error-correcting codes (ECC) in hardware or software architecture improves data integrity and system robustness in safety-critical applications.
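A deterministic mediation layer of the kind listed above can be sketched as a fixed set of rules that sits between the AI model and the controlled equipment. The example below is a hypothetical illustration for a power setpoint; the `Limits` fields, the numeric values, and the reject-and-hold policy are assumptions rather than anything specified in the report.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Limits:
    min_mw: float       # lowest setpoint the plant may be given
    max_mw: float       # highest setpoint the plant may be given
    max_step_mw: float  # largest change allowed in one control cycle

@dataclass(frozen=True)
class Decision:
    accepted: bool
    setpoint_mw: float  # value that is actually forwarded
    reason: str

def mediate(proposed_mw, current_mw, limits):
    """Vet an AI-proposed setpoint with fixed rules; hold the current value on rejection."""
    if not isinstance(proposed_mw, (int, float)) or not math.isfinite(proposed_mw):
        return Decision(False, current_mw, "proposal is not a finite number")
    if not limits.min_mw <= proposed_mw <= limits.max_mw:
        return Decision(False, current_mw, "proposal outside operating range")
    if abs(proposed_mw - current_mw) > limits.max_step_mw:
        return Decision(False, current_mw, "proposed change exceeds step limit")
    return Decision(True, float(proposed_mw), "within limits")

# Illustrative limits and proposals (assumed values).
limits = Limits(min_mw=0.0, max_mw=100.0, max_step_mw=5.0)
for proposal in (52.0, 120.0, 70.0, float("nan")):
    print(proposal, mediate(proposal, current_mw=50.0, limits=limits))
```

Because the rules are plain comparisons, the same proposal always produces the same decision, so this layer can be verified with conventional testing even when the model behind it cannot.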

Monitoring and Testing

- Vigilant Observability: Advanced monitoring solutions, such as Datadog’s LLM monitoring, facilitate detection of anomalous behaviors and hallucinations, supporting continuous safety oversight.

- Simulation and Scenario Testing: Developing virtual environments and safety-critical scenario testing, especially for autonomous vehicles, helps evaluate responses under complex, unpredictable conditions.

Governance and Standards

- Safety Frameworks: Adoption of comprehensive safety and risk assessment standards such as ISO 31000, IEC 62443, and specific AI safety guidelines supports structured risk management.

- Risk Thresholds and Autonomy Limits: Establishing clear thresholds for system autonomy and implementing continuous monitoring mechanisms are vital to mitigate unforeseen behaviors.
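Autonomy thresholds of this kind can be expressed as an explicit routing rule. The following sketch assumes the system exposes a confidence score between 0 and 1 and an integer severity rating for each proposed action; the threshold values and the three routes are hypothetical and would need to be set through a proper risk assessment.

```python
from enum import Enum

class Route(Enum):
    AUTO_EXECUTE = "auto_execute"
    HUMAN_REVIEW = "human_review"
    BLOCK = "block"

def route_action(confidence, severity,
                 auto_min_confidence=0.95,
                 review_min_confidence=0.60,
                 max_auto_severity=2):
    """Decide how much autonomy a proposed action gets (all thresholds are assumed values)."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence < review_min_confidence:
        return Route.BLOCK          # too uncertain even for review
    if confidence >= auto_min_confidence and severity <= max_auto_severity:
        return Route.AUTO_EXECUTE   # confident and low impact
    return Route.HUMAN_REVIEW       # everything else goes to an operator

for confidence, severity in [(0.99, 1), (0.99, 4), (0.80, 1), (0.30, 1)]:
    print(confidence, severity, route_action(confidence, severity).value)
```

Logging each routing decision alongside its inputs would give the continuous monitoring mentioned above something concrete to audit.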

Emerging Solutions and Future Directions

- Safety-Enhanced AI Architectures: Integration of safety guardrails, probabilistic reward models, and positive reinforcement mechanisms can incentivize safe behaviors even amid non-determinism.

- Regulatory Evolution: International efforts, including AI standards committees and regulatory initiatives (e.g., the EU AI Act and the work of China's Ministry of Industry and Information Technology), are developing frameworks to govern non-deterministic AI deployment effectively.

- Universal Ethical Frameworks: Mapping universal human values to concrete AI safety definitions enhances robustness and aligns AI behaviors with fundamental ethical principles, reducing risks from unpredictable outputs.